This blog introduces the operational aspects of Oracle Cloud Infrastructure (OCI). I recently went through a course on the subject and would like to summarize it here.

Introduction

Cloud operations refers to managing resources on the cloud using tools that provide insight and automation control, so that applications can be deployed faster. Operational tasks may involve building, configuring, monitoring, protecting, governing and securing cloud resources. These tasks can be performed manually or can be automated. Manual operation involves logging on to the console, controlling deployments and creating a central repository through scripting. Automation involves using Terraform or Ansible, or a combination of scripting and other tools, to achieve scale.

In OCI operation tasks, there are a few frequently used terms that one must get accustomed to. The list of terms is as follows :

- Idempotent – Applying a change or other action more than once has the same effect as applying it once, which avoids duplication.

- Immutable – A type of infrastructure or service that is never changed after deployment. When the time comes to troubleshoot, you simply replace the resource.

- Ephemeral – A term used to refer to impermanent resources or temporary resource assignments.

- Stateless – The notion that an application is constructed in such a way as to avoid reliance on any single component to manage transactional or session related information. Here immutable instances may be used for stateless application deployment.

- Infrastructure as Code (IaC) – The process of managing and provisioning cloud resources and services through machine-readable definition files, rather than through physical hardware configuration or interactive configuration tools.
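To make the idea of idempotency concrete, here is a minimal sketch. It assumes a purely hypothetical in-memory inventory in place of a real cloud API; the function name `ensure_bucket` and the settings used are illustrative only.

```python
# Hypothetical in-memory "cloud": maps a resource name to its settings.
def ensure_bucket(inventory: dict, name: str, settings: dict) -> bool:
    """Make sure a bucket with these settings exists.

    Returns True if something was changed, False if the desired
    state was already in place.
    """
    if inventory.get(name) == settings:
        return False  # already in the desired state, so do nothing
    inventory[name] = dict(settings)
    return True


inventory = {}
first = ensure_bucket(inventory, "logs", {"tier": "standard"})
second = ensure_bucket(inventory, "logs", {"tier": "standard"})
print(first, second, len(inventory))
```

Running the same call twice leaves a single bucket behind, which is the behavior tools such as Terraform and Ansible aim for when a definition is applied repeatedly.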

The OCI Automation tools can be listed as follows :

- Application Programming Interface (API) – It is a set of clearly defined methods of communication among various components for building software. It forms the crux of cloud environment.

- Software Development Kit (SDK) – A set of tools that can be used to create and develop applications. It is an abstraction layer between the API and your software that enables you to interact with OCI programmatically. SDKs are available for languages such as Ruby, Python and Java.

- Command Line Interface (CLI) – A client-only tool used to execute commands that create, modify and delete resources. It is an essential tool for task automation using OCI resources. It provides the same capabilities as the console, along with some extended ones. When combined with PowerShell or bash scripts it can provide powerful automation capabilities. Direct OCI API interaction is possible.

- Terraform – A client-only open source tool for managing IaC/orchestration. You can think of it as a platform interpreter that reads declarative text and converts it into API calls. Immutable resources are used here.

- Ansible – A client-only tool that can be used as a configuration management tool, a DevOps tool and an IaC tool.

- Chef – It is a client/server configuration management tool.

Each of the above tools has many capabilities, which can be classified as follows:

- Programming – API, SDK

- Provisioning – API, SDK, Ansible, Terraform

- Monitoring – API, SDK

- Various other actions – API, SDK

- Multi Cloud Compatible, Provisioning – Terraform, Ansible (Non OCI specific tools)

- Automation of simple repeatable tasks – CLI

- Manage application deployment & configuration – Ansible / Chef

- Creating or destroying complex application architecture – Terraform

Infrastructure as Code

For a long time, manual intervention was the only way of managing computer infrastructure. Servers had to be mounted on racks, operating systems had to be installed, and networks had to be connected and configured. At the time, this wasn’t a problem, as development cycles were so long-lived that infrastructure changes were infrequent and the scale of deployment was relatively limited. Later, however, technologies such as virtualization and the cloud, combined with the rise of DevOps and agile practices, shortened software development cycles dramatically. As a result, there was a demand for better infrastructure management techniques. Organizations could no longer afford to wait hours or days for servers to be deployed, which is where IaC helped raise the standard.

At present, the scale of infrastructure is also much higher: instead of a handful of large instances we might have many smaller ones, so there are many more things to provision. We might also need to scale up or down to handle load while saving on cost. With IaC, you can run a script every day that brings up a thousand instances, and run the same script every evening to bring the count back down to whatever the evening load requires. In the case of OCI, the following are the three types of IaC one can make use of :

Scripting

Writing scripts is the most direct approach to IaC. Ad-hoc scripts are best for executing simple, short, or one-off tasks. For complex setups, however, it’s best to use a more specialized alternative. The OCI CLI is a unified tool that allows interaction with most services through a single command. Scripting can be combined with the CLI to make life easier. CLI commands follow the format shown below. After the program name, i.e. “oci”, specify the service name such as “compute”, followed by the component such as “instance”, the action such as “list”, and any additional switches.

$ oci compute instance list --additional parameters

Moving on to configuration, before using CLI you must provide IAM credentials with appropriate access. The credentials provided will be separated into profiles and stored in ~/.oci/config. Credentials may be input manually or by using the following setup command.

$ oci setup config

The above command creates your default profile, which appears as [DEFAULT] in the config file. Multiple profiles can be specified in the same config file, each under its own name in square brackets, with a different region, tenancy, compartment and other details. To use a profile such as “dev_compartment”, specify its name with the --profile option as follows.

$ oci compute image list --profile dev_compartment
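Since the config file is organized into named sections in square brackets, it can be read like an INI file. The sketch below uses Python's standard `configparser` on a made-up sample; the OCIDs and regions are placeholders, and the way missing keys fall back to `[DEFAULT]` is `configparser` behavior that I am assuming mirrors how profiles are resolved.

```python
import configparser

# Made-up sample in the same shape as ~/.oci/config (values are placeholders).
SAMPLE_CONFIG = """
[DEFAULT]
user = ocid1.user.oc1..exampleuser
tenancy = ocid1.tenancy.oc1..exampletenancy
region = us-ashburn-1

[dev_compartment]
region = us-phoenix-1
"""


def load_profile(text: str, profile: str) -> dict:
    """Return the settings of one profile, with [DEFAULT] values as fallback."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    if profile != "DEFAULT" and not parser.has_section(profile):
        raise KeyError(f"profile {profile!r} not found")
    return dict(parser[profile])


dev = load_profile(SAMPLE_CONFIG, "dev_compartment")
print(dev["region"], dev["tenancy"])
```

Here the dev_compartment profile overrides only the region and picks up the rest from the default profile.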

Once the CLI is set up and configured with profiles and user credentials, the next step is to use optional configurations to extend CLI functionality. The default location and file name for the CLI-specific configuration file is ~/.oci/oci_cli_rc. This file can be used to specify a default profile, define aliases for commands and options, set a default compartment per CLI profile, and so on. Use the following command to set it up.

$ oci setup oci-cli-rc

Other helpful options are --output, --query, --generate-full-command-json-input and --from-json. They can be used as part of an “oci” command to reduce the effort of command execution and to simplify the resulting output.

Launching a Compute Instance :

The command parameter --generate-full-command-json-input can be used to create a template into which you can fill specific values. This is great for creating resource templates, such as one for a development compute instance. The following command generates the template.

$ oci compute instance launch --generate-full-command-json-input > compute_template.json

Once the template is ready, update the required and optional parameters and remove the unused ones. After that, you can launch a compute instance as shown below by using --from-json and specifying the JSON template. There is no need to pass additional parameters on the command line.

$ oci compute instance launch --from-json file://compute_template.json
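Editing the template by hand works, but the same step can be scripted. The sketch below is only an illustration: `GENERATED` is an abbreviated, hypothetical stand-in for the generated file (the real template has many more keys), and the shape value is a placeholder.

```python
import json

# Abbreviated, hypothetical stand-in for compute_template.json.
GENERATED = {
    "availabilityDomain": "string",
    "compartmentId": "string",
    "displayName": "string",
    "shape": "string",
    "freeformTags": {"string": "string"},
}


def fill_template(template: dict, values: dict) -> dict:
    """Keep only the parameters we set, in template order; drop the rest."""
    unknown = set(values) - set(template)
    if unknown:
        raise KeyError(f"not in template: {sorted(unknown)}")
    return {key: values[key] for key in template if key in values}


filled = fill_template(
    GENERATED,
    {"displayName": "dev-instance", "shape": "EXAMPLE.SHAPE"},
)
print(json.dumps(filled, indent=2))
```

Rejecting keys that are not in the template is a deliberate choice here, so that a typo in a parameter name fails early instead of being silently written to the file.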

A script can be used to orchestrate several tasks. Through a bash script, you can launch a new instance, wait for it to respond to an SSH request, and test the sample website. Through a single script stored in source control such as GitHub, you can display the OCI compartment list, VCN list, subnet list, private IP list and compute names of all the concerned servers in a single region. Data backups and simple archival tasks to and from object storage can be automated using the CLI with the get and put commands. The example below shows a multipart download (get) with its controlling parameters, and an upload (put) with multipart disabled.

$ oci os object get -bn MyBucket --file My5Gfile --name MyObject --part-size 512 --multipart-download-threshold 512

$ oci os object put -bn MyBucket --file My5Gfile --no-multipart

Using the Console :

Now let us walk through working with the OCI CLI, starting from the console. A user can log in to their tenancy in OCI using the console with the user name and password provided. Once logged in, the screen appears as follows :

One more prerequisite to get started is a terminal for SSH login to the instance (Git Bash is used here on Windows). Once this is ready, you can create a VCN by clicking on the bottom left icon in the above figure. Provide an arbitrary name and other suitable options, and click on the Create Virtual Cloud Network button to create your VCN. Once created, you can observe that a total of 3 public subnets are created automatically, one in each of the 3 Availability Domains.

Navigate to Compute > Instances on the console. Before creating an instance, open the Git Bash terminal and generate an SSH key pair using the command ssh-keygen. Once the key file is given an appropriate name and generated (along with its *.pub file), you can go ahead and create the instance by clicking on the Create Instance button in the console. Provide a suitable name (CLI-ex) for the instance and click on Change Image Source to select the appropriate image. The rest of the settings, such as AD, instance type, instance shape, compartment, VCN, subnet, Assign a public IP address, boot volume and SSH key (the key generated earlier), should be set appropriately. Finally, click the Create button to create the instance.

Next, hop on to the Git Bash terminal to log in securely to the instance over SSH. Type the command ssh -i cli opc@ followed by the public IP address of the instance. If the connection succeeds, the terminal prompt changes to [opc@CLI-ex ~]$. You can now check the version of the OCI CLI using the command oci -v. Next, type the oci setup config command. You will be prompted for the user OCID and tenancy OCID, which can be found in the console under Identity > Users and Profile > Tenancy respectively.

Next you will be prompted for the region and the RSA key. The public RSA key can be output with the command cat oci_api_key_public.pem. The key data needs to be copied and pasted into Profile > Add Public Key. Finally, type the following command; if it returns output without errors, the CLI is configured properly.

[opc@CLI-ex .oci]$ oci iam availability-domain list

In the following steps you will be introduced to commands to verify the compartment ID, create a VCN, create a subnet and launch an instance. To start with, navigate to Identity > Compartments and copy the ID of your newly created compartment.

[opc@CLI-ex .oci]$ oci network vcn list --compartment-id PASTE THE COMPARTMENT ID

[opc@CLI-ex .oci]$ export cid=PASTE THE COMPARTMENT ID

[opc@CLI-ex .oci]$ oci network vcn list --compartment-id $cid

Once the compartment ID is verified, next step would be to create a new VCN followed by creating a subnet within the VCN. The following command would provide the necessary output for VCN creation.

[opc@CLI-ex .oci]$ oci network vcn create --cidr-block TYPE IP ADDRESS -c $cid --display-name CLI-Demo-VCN --dns-label clidemovcn

[opc@CLI-ex .oci]$ oci network subnet create --cidr-block TYPE IP ADDRESS -c $cid --vcn-id GRAB IT FROM ABOVE CMD O/P --security-list-ids '["GRAB THE SECURITY ID FROM ABOVE CMD O/P"]'

Now that you are ready with subnet, the next step would be to create internet gateway and update the route table as follows.

[opc@CLI-ex .oci]$ oci network internet-gateway create -c $cid --is-enabled true --vcn-id GRAB IT FROM ABOVE CMD O/P --display-name DemoIGW

[opc@CLI-ex .oci]$ oci network route-table update --rt-id GRAB IT FROM THE ABOVE O/P --route-rules '[{"cidrBlock":"0.0.0.0/0","networkEntityId":"TYPE THE INTERNET GATEWAY OCID"}]'

Now that you have all this up and running, you can use query feature to find Oracle Linux Image IDs, and launch instances inside this subnet.

[opc@CLI-ex .oci]$ oci compute image list --compartment-id TYPE COMPARTMENT ID --query "data[?contains(\"display-name\",'Oracle')]|[0:1].[\"display-name\",id]"
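The query expression can look cryptic at first. It filters the returned images down to those whose display name contains a given text, keeps the first match, and outputs just its display name and ID. The plain Python below mirrors that logic on a hypothetical sample response; the image names and OCIDs are made up for illustration.

```python
# Hypothetical sample of the JSON returned by the image list command.
response = {
    "data": [
        {"display-name": "Windows-Server-Example", "id": "ocid1.image.oc1..exampleA"},
        {"display-name": "Oracle-Linux-Example-1", "id": "ocid1.image.oc1..exampleB"},
        {"display-name": "Oracle-Linux-Example-2", "id": "ocid1.image.oc1..exampleC"},
    ]
}


def first_matching_image(payload: dict, needle: str) -> list:
    """Filter on display name, project [name, id], keep at most one match."""
    matches = [
        [image["display-name"], image["id"]]
        for image in payload["data"]
        if needle in image["display-name"]  # case-sensitive, like contains()
    ]
    return matches[0:1]


result = first_matching_image(response, "Oracle")
print(result)
```

Note that the match is case-sensitive, so the text you search for has to use the same capitalization as the image names.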

The following commands will help to launch and check the status of instance.

[opc@CLI-ex .oci]$ oci compute instance launch --availability-domain TYPE AD --display-name demo-instance --image-id TYPE THE ABOVE IMAGE ID --subnet-id TYPE SUBNET OCID --shape TYPE COMPUTE SHAPE --compartment-id $cid --assign-public-ip true --metadata '{"ssh_authorized_keys":"PASTE THE CONTENTS OF cli.pub"}'

[opc@CLI-ex .oci]$ oci compute instance get --instance-id GRAB IT FROM ABOVE O/P --query 'data."lifecycle-state"'

If the instance has launched properly, the output shows RUNNING. You can check the public and private IP addresses using the following command. You can then connect to the instance via SSH, and at the end terminate all resources created in the lab.

[opc@CLI-ex .oci]$ oci compute instance list-vnics --instance-id GRAB IT FROM ABOVE O/P | grep "ip.:"
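An orchestration script usually cannot use an instance right after the launch command returns; it has to keep checking the lifecycle state until it reads RUNNING. The sketch below shows that polling loop in isolation. The `get_state` callable is an assumption standing in for whatever performs the real lookup (for example, a wrapper around the instance get command), and here it is fed from a canned list of states.

```python
import time


def wait_for_state(get_state, wanted="RUNNING", attempts=30, delay_s=0.0):
    """Call get_state() until it returns `wanted`; return the number of calls made."""
    for attempt in range(1, attempts + 1):
        if get_state() == wanted:
            return attempt
        time.sleep(delay_s)  # pause before asking again
    raise TimeoutError(f"state never became {wanted} after {attempts} attempts")


# Stand-in for the real lookup: a canned sequence of lifecycle states.
states = iter(["PROVISIONING", "PROVISIONING", "RUNNING"])
calls = wait_for_state(lambda: next(states))
print(calls)
```

In a real script you would set `delay_s` to a few seconds and keep the attempt limit, so that a failed launch ends in a clear timeout rather than an endless loop.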

Provisioning Tools

Provisioning tools focus on creating infrastructure. Using these types of tools, developers and admins can define exact infrastructure components. In the case of Terraform, a declarative tool, the end state is defined and the tool manages the rest. If you had to manually build a VCN with multiple subnets and multiple resources, it would take more than 20 to 25 minutes. With Terraform, you just declare the resources needed and the tool will automatically build them within a few minutes. To make additions, you just modify the Terraform config file and the tool will automatically recognize the change. Replicating the setup in a different region or for a different account also becomes easier using the same config file. Finally, this is helpful for repeatable workloads as well, since you can deploy and delete infrastructure on a need basis.

Now let us look at some of the IaC best practices. Use a good editor or IDE such as Vim, Sublime Text or IntelliJ. Source control is important, so it is better to keep your code in a source control repository such as GitHub or Bitbucket.

Terraform code is written in the HashiCorp Configuration Language (HCL) in files with the extension .tf, or in JSON in files with the extension .tf.json. The former is the human-readable format and the latter the machine-readable one. The first step in using Terraform is typically to configure the provider (AWS, OCI ..) you want to use. Once the provider is configured, you can start using the provider resources to create instances, block and object storage, networks etc.

provider "oci" {

tenancy_ocid = "${var.tenancy_ocid}"

user_ocid = "${var.user_ocid}"

fingerprint = "${var.fingerprint}"

private_key_path = "${var.private_key_path}"

region = "${var.region}"

}

The above code tells Terraform that you will be using OCI as the provider and deploy infrastructure in the region mentioned. Also the OCI provider enables Terraform to create, manage and destroy resources within the IAM user tenancy. The general syntax for a Terraform resource is:

resource "PROVIDER_TYPE" "NAME" {

[CONFIG ...]

}

PROVIDER_TYPE refers to the type of resources (ex:instance) to create in the provider ,i.e, OCI. NAME is an identifier you can use throughout the Terraform code to refer to the resource and CONFIG consists of one or more configuration parameters that are specific to that resource.

A data source refers to a piece of read-only information that is fetched from the provider (OCI here) every time you run Terraform. It's just a way to query the provider’s APIs for data. Data sources can be used to fetch data like availability domain names, image OCIDs, IP address ranges etc. Below is an example to fetch the AD name data.

data "oci_identity_availability_domains" "ADs" {

compartment_id = "${var.tenancy_ocid}"

}

Launching an instance :

Now let's build our first resource using a Terraform configuration file. The definition for a VCN would be as follows:

resource "oci_core_virtual_network" "simple-vcn" {

cidr_block = "10.0.0.0/16"

dns_label = "vcn1"

compartment_id = "${var.compartment_ocid}"

display_name = "simple-vcn"

}

The code declares a VCN resource named simple-vcn in the compartment identified from the compartment OCID environment variable. Execute the following commands for the creation of Virtual Cloud Network.

$ terraform init

$ terraform plan

$ terraform apply

The terraform init command performs several initialization steps in order to prepare a working directory for use. The terraform plan command shows you what will happen when you execute your Terraform configuration file. The terraform apply command creates the resources while managing the dependencies between them, or carries out the predetermined set of actions generated by a terraform plan execution. Finally, the terraform destroy command removes all the resources in the proper sequence.
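To get a feel for what "managing dependencies" and "proper sequence" mean, here is a small sketch that orders resources so that each one is created after the things it depends on, and destroyed in the reverse order. It uses Python's standard `graphlib` module (Python 3.9+) and a hypothetical dependency map; it illustrates the idea only and is not how Terraform is implemented internally.

```python
from graphlib import TopologicalSorter

# Hypothetical resources mapped to the resources they depend on.
depends_on = {
    "vcn": set(),
    "subnet": {"vcn"},
    "internet_gateway": {"vcn"},
    "instance": {"subnet"},
}

# Dependencies come out first, so this is a safe creation order.
create_order = list(TopologicalSorter(depends_on).static_order())

# Tearing down in reverse never removes something that is still in use.
destroy_order = list(reversed(create_order))

print("create :", create_order)
print("destroy:", destroy_order)
```

The VCN always comes first on creation and last on destruction, while the relative order of the subnet and the internet gateway can vary because neither depends on the other.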

There are various features that can be used with Terraform in order to better manage IaC:

- The -target flag on the terraform plan and terraform apply commands allows you to target one or more specific resources.

- The terraform output command can be used to display values that are generated dynamically as part of creating infrastructure.

- Terraform modules are portable Terraform configurations. A module block can be used to reference another module to create a reusable set of content.

- The terraform taint command is used to forcibly destroy and recreate a resource on the next apply.

- Terraform provisioners (ex: remote-exec) help you do additional setup and configuration when a resource is created or destroyed. You can move files, run shell scripts, and install software.

- The remote backend feature is used for state file management, wherein the state data is written to remote storage such as an Object Storage bucket.

- Oracle Resource Manager is one more feature that can be leveraged. It can be used for state file management, stack management and access control management.

Configuration Management Tools

Also known as configuration as code, these are specialized tools designed to manage software. They usually focus on installing and configuring servers. Examples of these tools are Chef, Ansible etc. Configuration management itself is a process for maintaining computer systems, servers and software in a desired, consistent state. It’s a way to make sure that a system performs as expected as changes are made over time.

With the scaling of cloud resources, there are many challenges to address. Many cloud workloads are highly distributed. Common management challenges include inconsistent execution of manual changes, time-consuming deployment of applications etc. The configuration management solution is to track and manage resources in different parts of the world using the right toolkit, whose features should include: identifying and tracking resources, defining and applying configuration consistently, eliminating and overwriting manual changes, discovering and reporting the hardware or software configurations that exist etc.

The tool that satisfies most of the above points is Ansible. It is simple, with no coding skills required; powerful, as it can be used for application and infrastructure deployment as well as configuration management; and agentless, so there are no agents to exploit or manage. The Ansible control machine does not run on Windows. The best method is to deploy Ansible as a control machine on one of your OCI compute instances and have it as a central point for managing all the resources in your environment. It uses modules, driven by files called “playbooks”, to execute commands remotely over SSH. The Ansible installation command is :

$ sudo yum install -y ansible

Before starting to use the tool, you need to create a hosts file to organize the servers that will be managed by Ansible. Default file location is /etc/ansible/hosts. Also, you can run remote ad-hoc commands(refer to ex below) against one or more of your hosts as defined in the inventory file.

$ ansible ipaddress -m ping

$ ansible servergroupname -m ping

$ ansible all -m ping

Ansible playbooks are where we define what is going to happen. The code is written in YAML and is human readable. It is procedural, in that we define the steps to be performed, rather than declarative, where the end state is defined. OCI Ansible modules are a set of interpreters, similar to the OCI provider for Terraform, that allow us to use Ansible features to make API calls against the OCI API endpoints. This allows us to create infrastructure and apply configuration management with the same tool. OCI Ansible modules are available for download from a GitHub repository. Once the OCI modules for Ansible are installed and your user credentials are configured, it is time to run a quick test. Run the command below.

$ ansible-playbook oci_sample.yml

The “oci_sample.yml” file contains code to display a summary of the buckets in OCI Object Storage in a compartment. The OCI Ansible module used in the code is oci_bucket_facts, which queries information from your infrastructure and displays the result on screen.

In addition to online documentation, the ansible-doc command can be used to view detailed help for each module. Example OCI modules are oci_compartment_facts, oci_image_facts etc.

Operational Activities

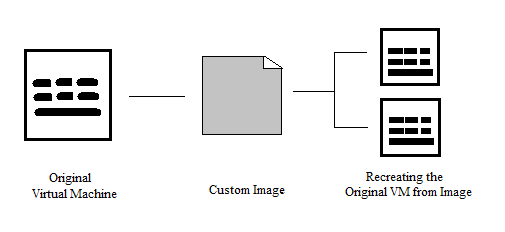

Managing OCI images involves importing an image or creating a new image. When migrating from on-premises to the cloud, whatever is present on-premises can be moved to the cloud, but this may not be a good idea in all cases. Sometimes there may be legacy hardware running an application that contains a lot of data. In such a case, creating a new instance would be better. Sometimes the user may not want to install the application, in which case a suitable image can be chosen from the partner image section, which contains images with the application preinstalled and a BYOL feature. A custom image is created from the boot volume only; if you also want the block volumes, you will need to clone them and attach them to the newly created instance. Managing custom images on the console involves the following steps.

- Navigate to Compute and select your compartment under the compartment subsection. From the compartment, launch the instance that you need.

- When the instance is created, you can connect through SSH using the command:

ssh -i oci opc@instancepublicipaddress

- Once you have logged into the instance, you can apply the latest updates using the command:

sudo yum update -y

- Once the instance is updated, you can take a custom image and launch an instance from it. Before that, if you want to create a file on the instance, you can do so with the command:

touch web_server.txt

- With the instance still open in the console, navigate to Actions > Create Custom Image. In the new window, select the appropriate compartment and name. Navigate to Compute > Custom Images to check the status of the image creation.

- Once the custom image is created, create a new instance using its Create Instance button. Choose appropriate options to create the instance.

- Once the instance from the image is created, connect through SSH using the command:

ssh -i oci opc@instancepublicipaddress

- Once connected, check for the earlier created file on the instance using the command:

ls

The web_server.txt file should exist.

- While creating an instance you can specify a user data file/script, i.e. a startup script that will run when the instance boots up or restarts. These scripts are helpful for installing software and updates and for ensuring that services are running within the VM.

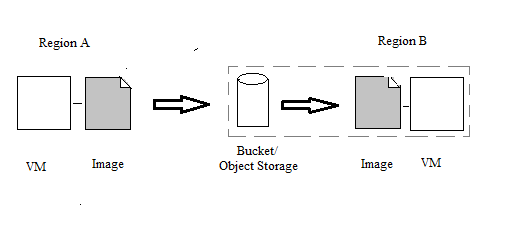

Disaster Recovery

In this section we will be dealing with disaster recovery on OCI using the cross region copy of the instance/custom image. This is implemented by exporting/importing to or from the object storage. The same is depicted in the picture below :

Now let us look at the implementation of the same on OCI console as follows.

- As a first step you can use the above web_server instance on the console. Navigate to Actions > Create Custom Image. In the new window, select appropriate Compartment & the name to create the custom image.

- Next, navigate to the DR destination region (B) by selecting the region on the top right of the console. In order to create a bucket here, click the button Create Bucket under Object Storage > Object Storage.

- Inside the bucket, you need to create pre-authenticated requests by clicking on the button of the same name and in the new window select object, enter object name, select access type. Copy the resulting URL.

- Now move back to the earlier region (A) by selecting from the top right and navigate to Compute > Custom Images. Here you can find the earlier created image, click on the 3 dotted icon next to the image name, select Export Custom Image. Click on Object storage URL & paste the earlier copied URL. Finally, click on Export Image to export to bucket.

- Once the export status shows as complete, hop over to the destination region and navigate to Object Storage > Object Storage. In the bucket that you created earlier, you should be able to see the exported image.

- Next navigate to Compute > Custom Images, click on Import Image. Populate the Name, Object storage URL, Image type, launch mode and click on Import Image.

- Once the image is imported, you can create a compute instance from the image of the migrated instance by clicking on the Create Instance button. Enter the necessary information along with SSH key pair.

- Once the instance is created, connect through SSH. Type the command :

ssh -i oci opc@instancepubipaddress

followed by the command ls. The web_server.txt file should exist, which is the same output that you got in the other region.

- From a security standpoint, it is good to delete the URL by deleting the existing item under pre-authenticated requests.

Security

As explained in my earlier blog on OCI introduction, OCI follows the shared responsibility model. Here the customer is responsible for “security in the cloud”, which involves handling user credentials and other information, securing user access behavior, strengthening IAM policies, patching, security lists, route tables, VCN configuration, key management etc. Oracle is responsible for “security of the cloud”, which involves protecting the hardware, software, network and facilities that run Oracle Cloud services. OCI security is based on the 7 pillars of a trusted enterprise cloud platform: customer isolation, data encryption, security controls, visibility, secure hybrid cloud, high availability and verifiably secure infrastructure.

To meet the security and compliance requirements for your cloud resources, you can isolate them from other tenants, Oracle staff and external threats, or isolate different departments from each other using compartments. Consider a compute resource, where you can opt for the single-tenant or multi-tenant model. In the single-tenant model, the customer gets full access to a bare metal server. In the multi-tenant VM model, however, each VM may belong to a different customer. Next, let us look at security on the networking side:

- Each customer’s traffic is isolated in a private L3 overlay network.

- Network segmentation is done via subnets that may be set as either private with no internet access or as public with public IP address.

- Customers can control the inter subnet and external VCN traffic by appropriately setting the security lists and route table rules.

- Service gateway can be used for private network traffic between VCN and object storage.

- VCN peering can be used for securely connecting between multiple VCNs.

Now that we have looked into the compute, networking related security, next we will be dealing with the security of data. Data encryption deals with the security of data at rest in block storage, boot volume, object storage, file system and data in transit. In each of the above storage types data encryption will be done by using specific keys and data transfer is done over highly secure network.

- To transfer data between two regions, customer managed keys (KMS) can be used for end-to-end encryption, i.e. a VPN tunnel from region to region.

- Oracle TDE encryption can be used for DB files and backups at rest. Native Oracle Net services encryption can be used for data in transit.

- The keys can be managed using Oracle Key management service that is highly available, durable and secure. It is centralized with create, delete, disable, enable and rotate facilities.

- User authentication takes several forms: a password is required to log in to the console to access OCI resources, an API signing key to access the REST APIs, and an SSH key to authenticate compute logins, while an auth token can be used to authenticate with 3rd party APIs. Multi-factor authentication provides additional security through an additional authentication requirement.

- Instance Authentication/Principal is the functionality in which rather than a particular user name and password being hard coded into an instance, a dynamic group method is used wherein all instances in a group can make API calls against OCI services.

Data Backup

For data backup, we will first look at the terms RPO and RTO. These are vital and need to be defined before an infrastructure is deployed.

- RPO (Recovery Point Objective) – It refers to a company’s loss tolerance, i.e. the amount of data that can be lost before significant harm to the business occurs.

- RTO (Recovery Time Objective) – It refers to how much time an application can be down without causing significant damage to the business.
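A quick worked example shows how the two terms translate into numbers. The sketch below assumes the simplest possible model: backups are taken at a fixed interval, so the worst case is a failure just before the next backup, and a restore takes a known amount of time. The function name and the figures are illustrative only.

```python
def check_objectives(backup_interval_h, restore_time_h, rpo_h, rto_h):
    """Compare a backup plan against the RPO and RTO targets (all in hours)."""
    # Worst case: the failure happens just before the next backup would run.
    worst_case_data_loss_h = backup_interval_h
    return {
        "worst_case_data_loss_h": worst_case_data_loss_h,
        "rpo_met": worst_case_data_loss_h <= rpo_h,
        "rto_met": restore_time_h <= rto_h,
    }


# Daily backups and a 2 hour restore, against a 4 hour RPO and a 3 hour RTO.
report = check_objectives(backup_interval_h=24, restore_time_h=2, rpo_h=4, rto_h=3)
print(report)
```

In this example the restore is fast enough for the RTO, but daily backups could lose up to 24 hours of data, so the 4 hour RPO is missed and the backup frequency would need to increase.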

In case of block volume the data backup options are as follows;

- A complete point in time snap shot copy of block volume.

- Can be encrypted and stored in object storage and can be restored as a new volume in any Availability Domain within the same region.

- On-demand, one-off block volume backups provide a choice of incremental or full backup, while scheduled policy-based backups come in bronze, silver and gold types.

- Can restore a volume in less than a minute regardless of size.

- Cross region backup possible, provided there is no customer restriction.

In case of other storage, the backup options are as follows;

- Object Storage Life Cycle Management – Define rules to automatically archive or delete data after a specified number of days.

- Database – Autonomous Transaction Processing (ATP) and Autonomous Data Warehouse (ADW) databases are backed up automatically. Even with these, it's always better to take a manual backup as well. A managed backup and restore feature is available for VM/BM DB systems. Backups are stored in object storage or local storage, with incremental or full backup options available.

- Storage Gateway Service – Storage gateway is installed as a Linux docker instance on one or more hosts in your on-premise data center. The end users can login to the application server in on-premise and connect through NFSv4 client to the docker/storage gateway that maps to the object storage/archive directly on OCI.

Cloud Scale

As discussed in the earlier OCI foundation blog, there are two types of scaling namely : Vertical and Horizontal scaling. In case of vertical scaling, scaling up/down is performed by stopping the instance first and then adding resources like CPU, RAM, Storage. Vertical scaling is supported for block volume and boot volume. The vertical scaling can be done in three ways;

- Expand an existing volume in place with offline resize.

- Restore from a volume backup to a larger volume.

- Clone an existing volume to a new, larger volume.

Once the boot volume size is increased, the partition should be resized as well. This can be automated for Oracle Linux and CentOS by providing a cloud-init userdata script at provisioning time that runs growpart and gdisk and then reboots.

DB systems provide the ability to vertically scale with no downtime for the VM, BM and Exadata type of machine.

In horizontal scaling/autoscaling, you can automatically adjust the number of compute instances in an instance pool based on performance metrics such as CPU or memory utilization. The order of adjustment starts from AD to Fault Domain and finally the instances. Scaling of instances is cost dependent. The rules of scaling are as follows;

- The metric that triggers an increase/decrease in the number of instances can depend on CPU or memory utilization.

- Scaling rules depend on thresholds that the performance metric must reach in order to trigger a scaling event. The metrics must be carefully set.

- The cool down period value gives the system time to stabilize before scaling.
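The three rules above (a metric, thresholds, and a cool down period) can be sketched as a single decision function. This is a simplified illustration under my own assumptions: one instance is added or removed per scaling event, and the field names of `ScalingPolicy` are made up rather than taken from the OCI autoscaling configuration.

```python
from dataclasses import dataclass


@dataclass
class ScalingPolicy:
    scale_out_above: float  # CPU % above which an instance is added
    scale_in_below: float   # CPU % below which an instance is removed
    min_size: int
    max_size: int
    cooldown_s: int         # seconds to wait after the last scaling event


def decide(policy, cpu_percent, current_size, seconds_since_last_scale):
    """Return the pool size the policy asks for given the current metric."""
    if seconds_since_last_scale < policy.cooldown_s:
        return current_size  # still cooling down, so leave the pool alone
    if cpu_percent > policy.scale_out_above:
        return min(current_size + 1, policy.max_size)
    if cpu_percent < policy.scale_in_below:
        return max(current_size - 1, policy.min_size)
    return current_size


policy = ScalingPolicy(scale_out_above=80, scale_in_below=20,
                       min_size=1, max_size=3, cooldown_s=300)
print(decide(policy, cpu_percent=95, current_size=2, seconds_since_last_scale=600))
print(decide(policy, cpu_percent=5, current_size=2, seconds_since_last_scale=60))
```

The first call scales out because CPU is above the threshold and the cool down has passed; the second leaves the pool unchanged because the last scaling event was only a minute ago.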

When a load balancer is attached to an instance pool configuration, it behaves as follows during autoscaling: on scale out, new nodes are automatically added to it, and on scale in, terminated nodes are automatically removed. In an autonomous DB there are two scaling options: on-demand scaling, which is based on choice, and autoscaling.

Cost Management

OCI cost management is all about creating and monitoring budget, accessing and understanding usage reports, service limits and compartment quotas. Let’s begin with the cost analysis tool;

- Can be accessed from the console through Governance and Administration > Billing , click on Cost Analysis.

- A visualization tool that helps you understand spending patterns at a glance.

- Costs can be filtered by tags, compartments and date.

- Only administrators group members can use cost analysis.

- Trend lines show how spending patterns are changing.

Next we shall look at OCI Budgets. While creating a budget, the minimum fields to be entered are the budget scope, the target compartment (if compartment is selected as the scope) and the monthly budget amount. Other fields are optional. Some of the important points about budgets are as follows;

- Track actual and forecasted spending for the entire tenancy or per compartment.

- Set alerts on your budgets at predefined thresholds to get notified.

- View all your budgets and spending from one dashboard.

- To use budgets, you must be in a group that can use “usage-budgets” in tenancy. IAM policy should be set for accountants to inspect/access usage-budgets.

- All budgets are created in the root compartment, regardless of the targeted compartment.
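To illustrate how alerts on actual and forecasted spending might behave, here is a small sketch. It assumes thresholds are expressed as percentages of the monthly budget and that an alert on actual spend takes precedence over one on forecast; the function name and the figures are hypothetical and not taken from the OCI Budgets service.

```python
def triggered_alerts(budget, actual, forecast, thresholds_percent):
    """List (threshold %, reason) pairs for every threshold that is crossed."""
    alerts = []
    for pct in sorted(thresholds_percent):
        limit = budget * pct / 100
        if actual >= limit:
            alerts.append((pct, "actual"))
        elif forecast >= limit:
            alerts.append((pct, "forecast"))
    return alerts


# Monthly budget of 1000, 600 spent so far, 950 forecast for the month.
alerts = triggered_alerts(budget=1000, actual=600, forecast=950,
                          thresholds_percent=[50, 90, 100])
print(alerts)
```

With these numbers the 50% threshold fires on actual spend and the 90% threshold fires on the forecast, which is the kind of early warning that forecast-based alerts are meant to give.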

A usage report is a breakdown of the consumption of your OCI resources such as compute, networking, storage etc, provided as a granular report in CSV format. These reports are generated in another tenancy and stored in an Oracle-owned object storage bucket. You need to set up a cross-tenancy IAM policy to access usage reports. The reports can be downloaded by navigating to Governance and Administration > Billing, selecting Usage Report and clicking the report to download.

Last but not the least, we shall be looking into the OCI Service Limits and Compartment Quotas.

- On the console, navigate to Governance > Limits, Quotas and Usage.

- When signed up for OCI, a set of service limits are pre-configured for your tenancy.

- The service limit is the quota or allowance set on a resource, that can be increased by submitting a request.

- Compartment quotas are somewhat similar to service limits, but they are set by administrators, whereas service limits are set by Oracle.

- Quotas give a better control over how resources are consumed by letting you allocate specific resources to projects or departments.
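The relationship between the two can be pictured as two checks in a row. The sketch below is a deliberately simplified model under my own assumptions: the service limit is checked against usage across the whole tenancy, and the optional quota is checked against usage in one compartment. The function and parameter names are illustrative.

```python
def allowed_to_create(requested, compartment_usage, tenancy_usage,
                      service_limit, compartment_quota=None):
    """Return True if a request fits within both the limit and the quota."""
    # The service limit set by Oracle caps the tenancy as a whole.
    if tenancy_usage + requested > service_limit:
        return False
    # A quota set by an administrator caps one compartment, if present.
    if compartment_quota is not None and compartment_usage + requested > compartment_quota:
        return False
    return True


# The tenancy may hold 10 instances, but this compartment is capped at 2.
print(allowed_to_create(1, compartment_usage=2, tenancy_usage=5,
                        service_limit=10, compartment_quota=2))
print(allowed_to_create(1, compartment_usage=2, tenancy_usage=5,
                        service_limit=10))
```

The first request is refused by the compartment quota even though the tenancy has room, while the second succeeds because no quota is set.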
